GenAI for DAM: first look at Adobe Firefly

Kicking off a new series on the questions about, and impact of, Generative AI

As GenAI (or generative AI, or generative artificial intelligence, or robot art) has hit the creative world like a hurricane, some companies are trying to harness its power in a consumer-friendly product. Adobe has done so with Firefly, the GenAI tool they announced at Summit earlier this year and have been rolling out in beta to some clients. Once I found my golden ticket, I couldn’t wait to storm chase.

Adobe Firefly offers 6 ways to use GenAI: Text to image, Generative fill, Text effects, Generative recolor, 3D to image, and Extend image. I have explored the first 2, will check out the next 2 soon, and will try the last 2 once they are available. Today we will discuss the tool’s DAM impact and the first generation type: Text to image.

Overview and DAM impact

My first-look verdict: the GenAI technology powering Firefly is impressive, with room to get much better quickly. Hands, faces, scale, and understanding context over keywords are 4 areas I think will need to improve before this goes public. Friends experimenting with Midjourney, DALL-E 2, and Bing Image Creator have reported similar issues.

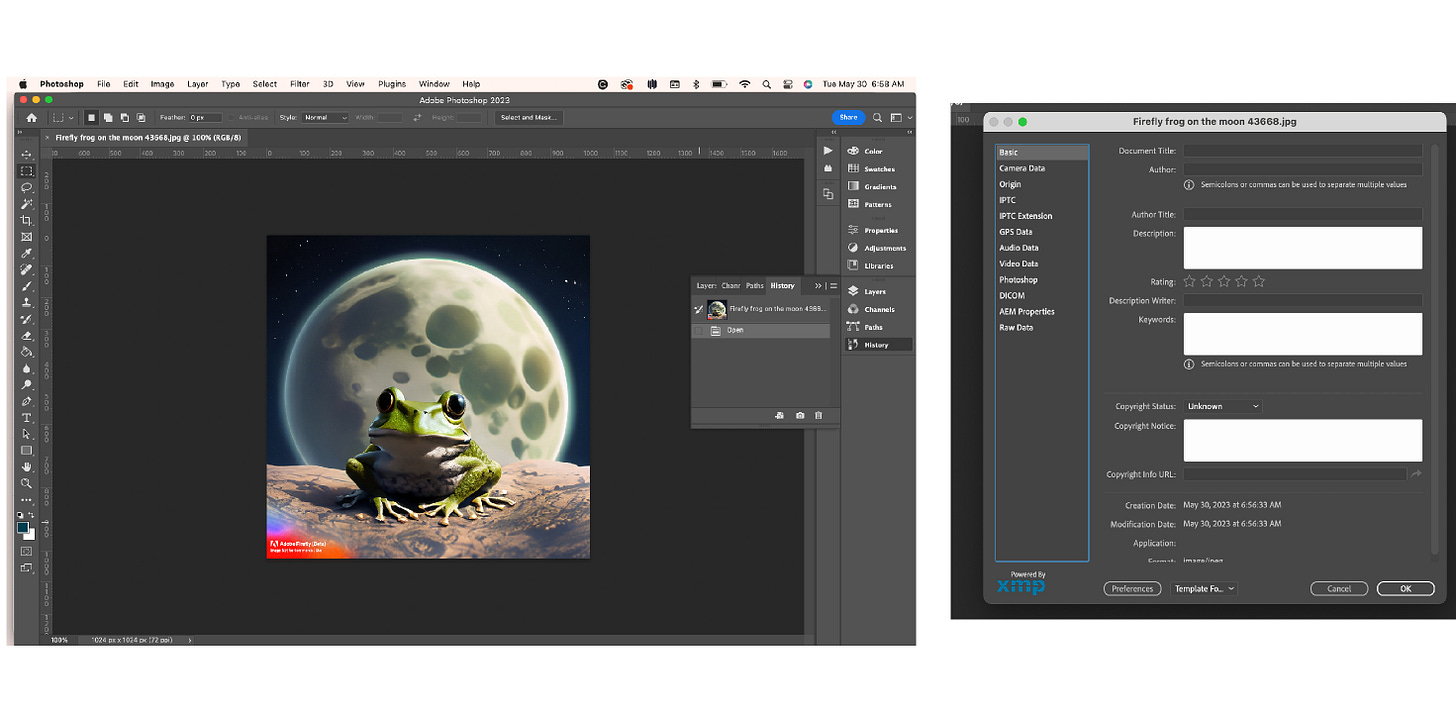

Here’s a quick example: the query was “frog on the moon.” I chose the “Art” content type, so we avoided some of the uncanny valley results of “Photo.” This frog is not on our Earth’s Moon anyway, unless it grew some similar-looking neighbors, so the context-over-keywords issue needs some work. But let’s say one of the images works: how will we include GenAI images in our digital asset management systems? Adobe has been thinking about this too, along with other GenAI transparency issues. Applying Content Credentials to generated images is a good start.

When I selected, downloaded, and opened the image in Photoshop, it was indeed labeled with Content Credentials. However, no author or other IPTC data was included. Maybe that will be part of the full commercial release. Since metadata rules our DAMs, these images will only be as valuable, searchable, and useful as the metadata they contain.

Generate tool 1: Text to Image

Like speaking to an art director, the more clearly you describe what you’re looking for, the better results you get.

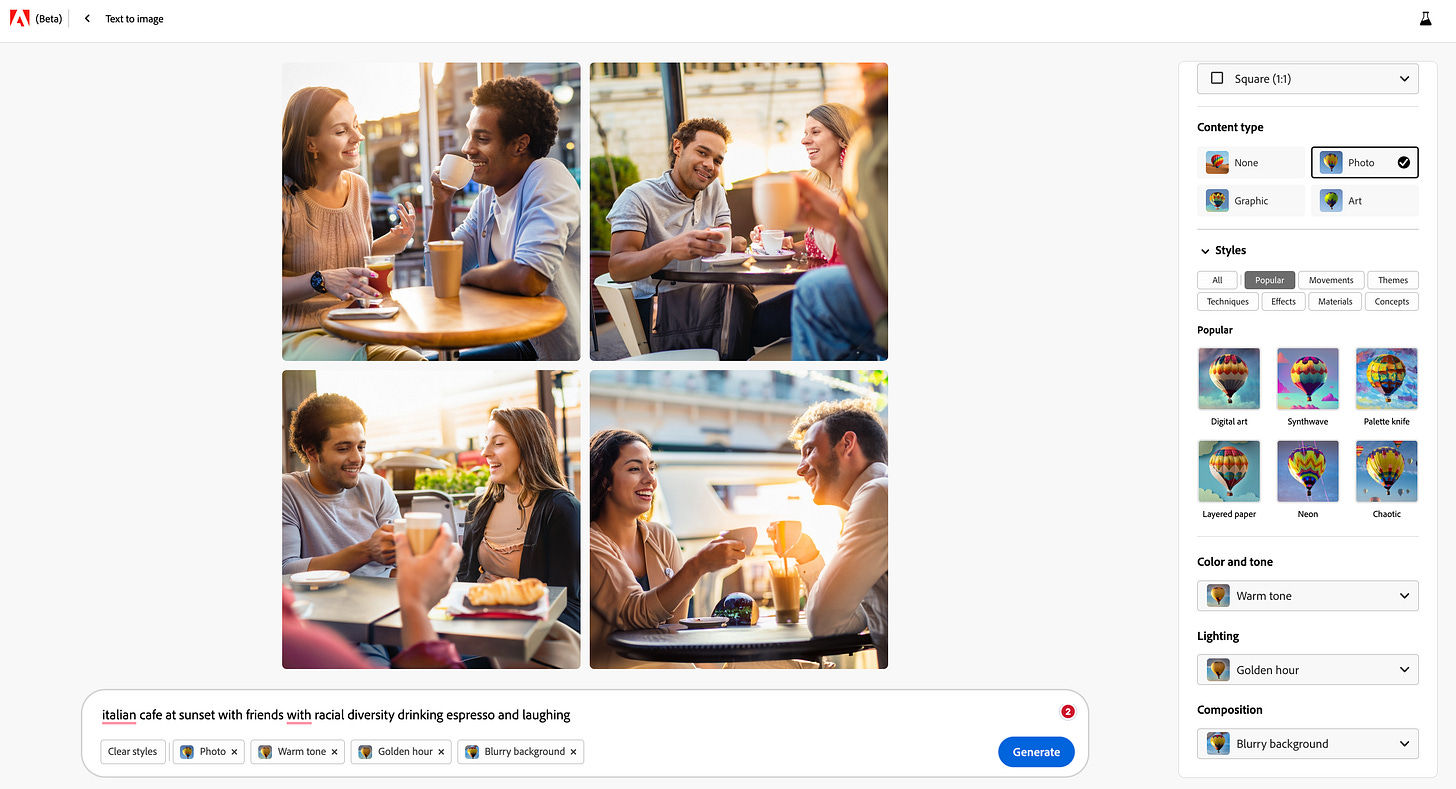

In this experiment, after clicking the “Text to image” tile, I asked for “an Italian cafe at sunset with friends drinking espresso and laughing.” The first results:

The lighting, location, and subject placement were spot on, along with the expected monster hands and faces. Where I think Adobe Firefly could differentiate itself in the market is its filtering.

I applied the “Warm tone” Color and tone, “Golden hour” Lighting, and “Blurry background” Composition filters and ran the generation again. The new images had slightly different people and objects, which I’m not sure is desirable (an option to keep the images as they are and only apply the filters sounds useful), but the filtering itself was very photorealistic. Maybe not a surprise from the people who brought us Photoshop.

Adobe is also set up for feedback. As a beta user, I can rate and report results. In this case, I gave the image a thumbs down.

The next time I refreshed, I noticed the results were much more Caucasian than what I had been getting. Racial diversity has already been a huge issue in GenAI. I’m no expert, but I understand that the biases of the people who build the technology affect the results that AI delivers. Adobe is aware and seems focused on fighting inherent racial bias, along with attribution and privacy concerns. Still, this is an area to improve.

Editors and content creators can of course include “racial diversity” or other keywords to get those results now, but the responsibility should not be theirs alone.

And finally, I switched the Content type from “Photo” to “Art” and received some charming illustrations. You can also go much deeper with Styles filters like “Neon,” “Layered paper,” and more.

None are ready for our DAM (nor are they supposed to be used outside of idea generation yet), but as with other AI tools, the results depend on both the technology and the queries and filters the user supplies. That is a new skill set for DAM professionals, creative teams, and other knowledge workers to master and use to add value to the way we work.